Modeling Extremely Large Images with xT

By Ritwik Gupta, Shufan Li, Tyler Zhu, Jitendra Malik, Trevor Darrell, Karttikeya Mangalam

As computer vision researchers, we believe that every pixel can tell a story. However, there seems to be a writer’s block settling into the field when it comes to dealing with large images. Large images are no longer rare—the cameras we carry in our pockets and those orbiting our planet snap pictures so big and detailed that they stretch our current best models and hardware to their breaking points when handling them. Generally, we face a quadratic increase in memory usage as a function of image size.
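To get a feel for that scaling, here is a back-of-the-envelope sketch. It assumes a ViT-style setup in which the image is cut into 16x16 patches and a single full self-attention matrix is stored in 16-bit precision. The function name, patch size, and precision are illustrative assumptions, not measurements of any particular model.

```python
def attention_matrix_bytes(side_px, patch_px=16, bytes_per_value=2):
    """Rough size of one tokens-by-tokens attention matrix for a square image.

    Assumptions (illustrative only): square image, non-overlapping patches,
    one attention head, one layer, 2 bytes per stored value.
    """
    tokens = (side_px // patch_px) ** 2          # patches along each side, squared
    return tokens, tokens * tokens * bytes_per_value


for side in (256, 512, 1024, 2048, 4096):
    tokens, nbytes = attention_matrix_bytes(side)
    print(f"{side:>5} px per side  {tokens:>6} tokens  {nbytes / 2**20:>8.1f} MiB")
```

Under these assumptions, doubling the side length quadruples the number of tokens and multiplies the size of the attention matrix by sixteen, which is why large images exhaust GPU memory so quickly.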

Today, we make one of two sub-optimal choices when handling large images: down-sampling or cropping. These two methods incur significant losses in the amount of information and context present in an image. We take another look at these approaches and introduce $x$T, a new framework to model large images end-to-end on contemporary GPUs while effectively aggregating global context with local details.

Why Bother with Big Images Anyway?

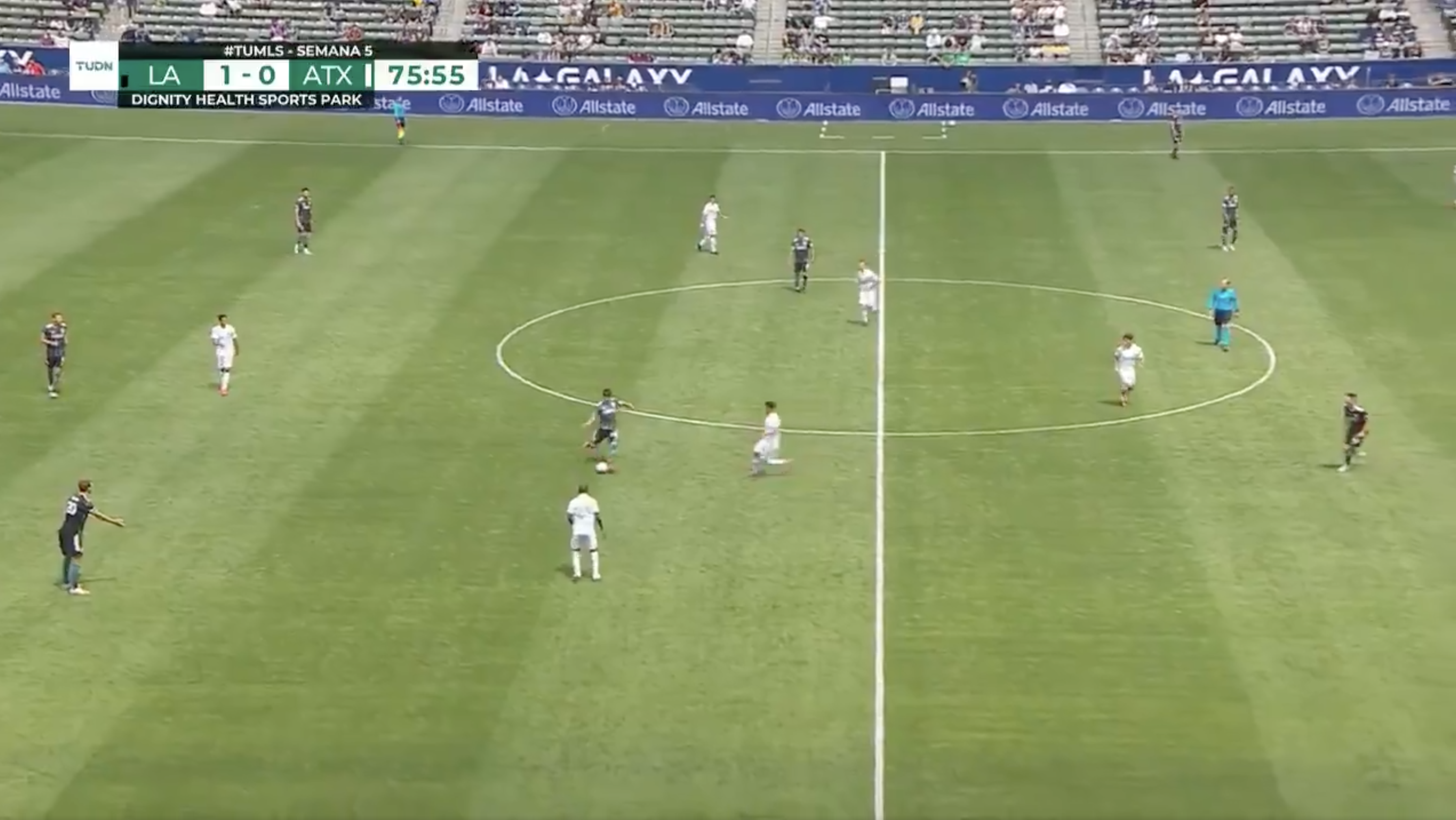

Why bother handling large images anyway? Picture yourself in front of your TV, watching your favorite football team. The field is dotted with players, with action occurring on only a small portion of the screen at a time. Would you be satisfied, however, if you could only see a small region around where the ball currently was? Alternatively, would you be satisfied watching the game in low resolution? Every pixel tells a story, no matter how far apart they are. This is true in all domains, from your TV screen to a pathologist viewing a gigapixel slide to diagnose tiny patches of cancer. These images are treasure troves of information. If we can’t fully explore the wealth because our tools can’t handle the map, what’s the point?

That’s precisely where the frustration lies today. The bigger the image, the more we need to zoom out to see the whole picture and zoom in for the nitty-gritty details at the same time, which makes it a challenge to grasp both the forest and the trees. Most current methods force a choice between losing sight of the forest or missing the trees, and neither option is great.

How $x$T Tries to Fix This

Imagine trying to solve a massive jigsaw puzzle. Instead of tackling the whole thing at once, which would be overwhelming, you start with smaller sections, get a good look at each piece, and then figure out how they fit into the bigger picture. That’s basically what $x$T does with large images.
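The jigsaw analogy can be sketched in a few lines of NumPy. This is only an illustration of the "look at sections, then combine" idea, not the released $x$T code: `split_into_regions` and `encode_region` are hypothetical names, and the per-channel mean stands in for a learned encoder.

```python
import numpy as np


def split_into_regions(image, region):
    """Cut an (H, W, C) image into non-overlapping region x region tiles."""
    h, w, c = image.shape
    assert h % region == 0 and w % region == 0, "sketch assumes exact tiling"
    rows, cols = h // region, w // region
    # (rows, region, cols, region, C) -> (rows, cols, region, region, C)
    return image.reshape(rows, region, cols, region, c).swapaxes(1, 2)


def encode_region(tile):
    """Hypothetical stand-in for a learned encoder: per-channel mean."""
    return tile.mean(axis=(0, 1))


# A random array stands in for a large image.
image = np.random.default_rng(0).random((1024, 1024, 3))

# Step 1: look at each section of the puzzle on its own.
tiles = split_into_regions(image, region=256)
features = np.array([[encode_region(t) for t in row] for row in tiles])

# Step 2: combine the per-region features into a view of the whole image.
# A plain average is used here purely as a placeholder for context aggregation.
global_summary = features.reshape(-1, features.shape[-1]).mean(axis=0)

print(tiles.shape, features.shape, global_summary.shape)
```

Each region is small enough to process on its own, and only the compact per-region features need to be held together when reasoning about the full image.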

In Conclusion

For a complete treatment of this work, please check out the paper on arXiv. The project page contains a link to our released code and weights. If you find the work useful, please cite it as below:

@article{xTLargeImageModeling,
  title={xT: Nested Tokenization for Larger Context in Large Images},
  author={Gupta, Ritwik and Li, Shufan and Zhu, Tyler and Malik, Jitendra and Darrell, Trevor and Mangalam, Karttikeya},
  journal={arXiv preprint arXiv:2403.01915},
  year={2024}
}