TLDR: We propose the asymmetric certified robustness problem, which requires certified robustness for only one class and reflects real-world adversarial scenarios. This focused setting lets us introduce feature-convex classifiers, which produce closed-form, deterministic certified radii within milliseconds.

Figure 1. Illustration of feature-convex classifiers and their certification for sensitive-class inputs. The architecture composes a Lipschitz-continuous feature map with a learned convex function, which is what makes sensitive-class certificates cheap to compute.

Deep learning classifiers are highly vulnerable to adversarial examples: small perturbations of an input that change the predicted class. This poses a significant challenge for machine learning reliability. Certifiably robust classifiers address it by offering a mathematical guarantee that the prediction stays constant within an $\ell_p$-norm ball of a given radius around an input.
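In symbols, and with notation assumed here for illustration rather than taken from the post: for a classifier $f$, an input $x$, and a radius $r > 0$, the prediction at $x$ is certified at radius $r$ when

$$
f(x + \delta) = f(x) \quad \text{for all } \delta \text{ such that } \|\delta\|_p \le r .
$$

The largest such $r$ that a method can prove is usually called the certified radius at $x$.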

Existing certified robustness methods have limitations such as nondeterminism and slow execution. We address these limitations by refining the certified robustness problem to align with real-world adversarial settings, which leads to the concept of asymmetric certified robustness.

The Asymmetric Certified Robustness Problem

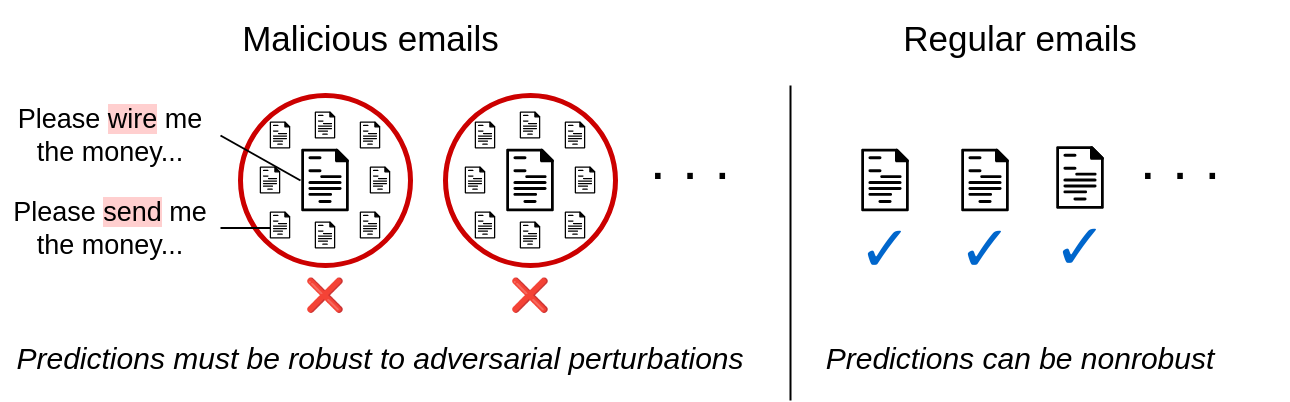

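Asymmetric certified robustness requires certified predictions only for inputs in a sensitive class, while maintaining high clean accuracy for all other inputs. Many practical adversarial settings have this shape: the adversary tries to get a sensitive-class input (spam, malware, a fraudulent transaction) misclassified as benign, so only that class needs a certificate. Examples include email filtering, malware detection, and financial fraud prevention.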

Figure 2. Asymmetric robustness in email filtering. Practical adversarial settings often require certified robustness for only one class.

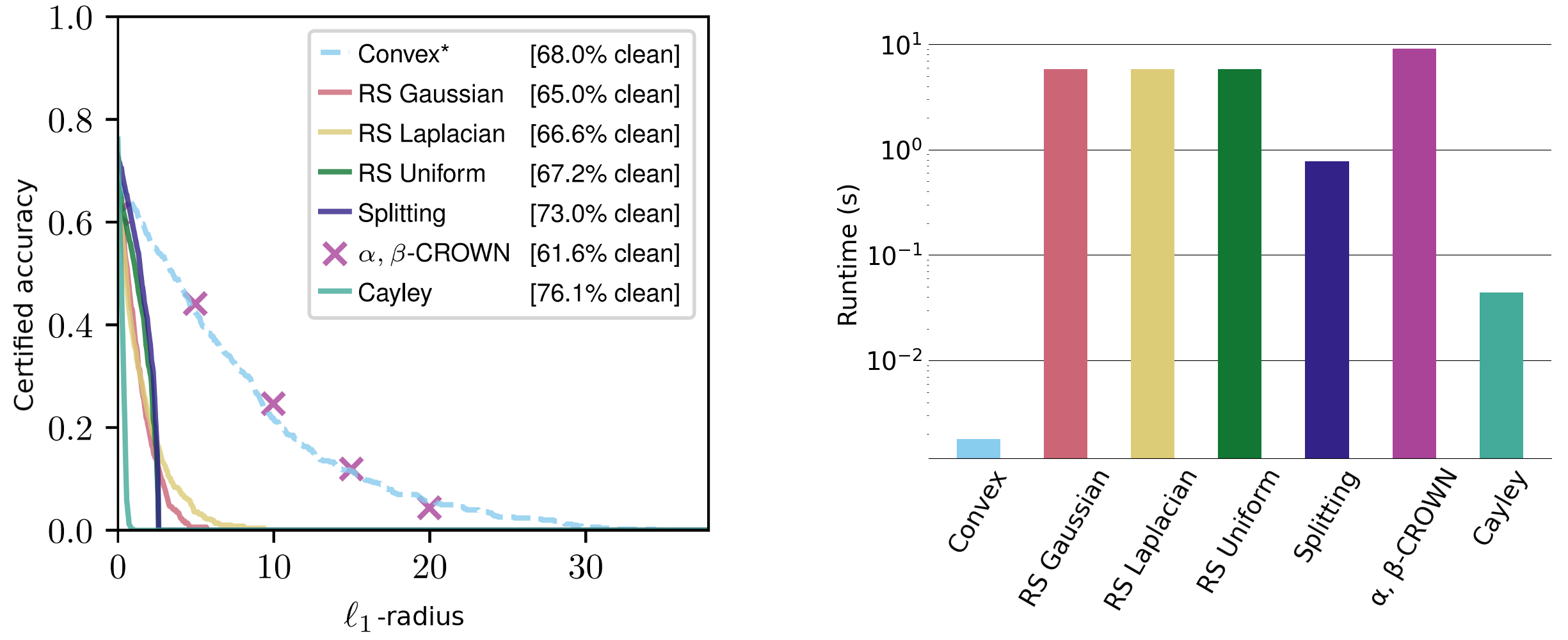

We introduce feature-convex neural networks to address the asymmetric robustness problem efficiently. This architecture composes a feature map with a learned Input-Convex Neural Network (ICNN), which allows fast computation of certified radii for the sensitive class.
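The composition can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the feature map `phi`, the layer sizes, and every weight value below are hypothetical choices made for the example. The only structural requirement shown is that the output weights of the convex part stay nonnegative.

```python
# Minimal sketch of a feature-convex classifier f(x) = g(phi(x)).
# All weights, sizes, and the feature map are illustrative assumptions.

def phi(x):
    """Hypothetical feature map: x concatenated with its elementwise absolute value."""
    return list(x) + [abs(v) for v in x]

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

# One-hidden-layer ICNN: g(z) = W_OUT . relu(W_IN z + B_IN) + A_SKIP . z + B_OUT.
# g is convex in z because relu is convex and W_OUT is nonnegative.
W_IN = [[1.0, -0.5, 0.3, 0.0],
        [-0.7, 0.2, 0.0, 0.6]]   # first-layer weights may have any sign
B_IN = [0.1, -0.2]
W_OUT = [0.8, 1.5]               # must stay nonnegative to preserve convexity
A_SKIP = [0.2, -0.1, 0.4, 0.3]   # affine skip connection, any sign
B_OUT = -0.5

def g(z):
    """Convex function of the features z."""
    hidden = [max(dot(row, z) + b, 0.0) for row, b in zip(W_IN, B_IN)]
    return dot(W_OUT, hidden) + dot(A_SKIP, z) + B_OUT

def f(x):
    """Feature-convex logit: convex in phi(x), not necessarily convex in x."""
    return g(phi(x))

def predict(x):
    """Positive logit means the sensitive class in this sketch."""
    return "sensitive" if f(x) > 0.0 else "other"
```

Note that convexity is imposed on `g` as a function of the features, so the decision region in the original input space need not be convex.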

Feature-Convex Classifiers

For sensitive-class inputs, feature-convex classifiers admit certified radii in any $\ell_p$-norm. The certificates are deterministic and closed-form, and take milliseconds per input to compute.
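A sketch of why a closed form is available, with notation assumed here (the paper gives the precise statement and conditions): write the classifier as $f = g \circ \varphi$, where $g$ is convex and $\varphi$ is a feature map with Lipschitz constant $\mathrm{Lip}_p(\varphi)$ in the $\ell_p$-norm, and suppose the sensitive class is predicted when $f(x) > 0$. Convexity of $g$ means it lies above its tangent plane at $\varphi(x)$, so by Hölder's inequality with $1/p + 1/q = 1$,

$$
f(x+\delta) \;\ge\; f(x) + \nabla g(\varphi(x))^\top \big(\varphi(x+\delta) - \varphi(x)\big) \;\ge\; f(x) - \|\nabla g(\varphi(x))\|_q \, \mathrm{Lip}_p(\varphi) \, \|\delta\|_p .
$$

The right-hand side stays positive whenever $\|\delta\|_p < r(x)$, where

$$
r(x) = \frac{f(x)}{\mathrm{Lip}_p(\varphi) \, \|\nabla g(\varphi(x))\|_q} .
$$

Evaluating $r(x)$ needs one forward pass and one gradient, with no sampling or optimization.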

Figure 3. Sensitive-class certified radii on the CIFAR-10 dataset. The architecture yields fast certified radii for multiple norms.

These certificates hold for any $\ell_p$-norm and remain fast and deterministic to compute even for larger networks. This stands in contrast to existing methods, which require longer runtimes and are often nondeterministic.
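To make the "any norm, one gradient" point concrete, here is a hedged sketch that computes a radius of the form logit / (Lipschitz constant × dual norm of the gradient) for the $\ell_1$, $\ell_2$, and $\ell_\infty$ norms. It assumes an identity feature map (Lipschitz constant 1) and a tiny hand-written convex function; the weights and the helper names `g`, `grad_g`, `dual_norm`, and `certified_radius` are all hypothetical and not taken from the authors' code.

```python
import math

# Sketch under stated assumptions: identity feature map (Lipschitz constant 1
# in every l_p norm) and a tiny hand-written convex g. Not the authors' code.

W = [[1.0, -2.0], [0.5, 1.0]]   # hidden-layer weights (any sign)
B = [0.0, -1.0]                 # hidden-layer biases
V = [1.0, 0.5]                  # output weights, nonnegative so g is convex
C = 0.25                        # output bias

def preactivations(z):
    return [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(W, B)]

def g(z):
    """Convex function: nonnegative combination of ReLU(affine) terms."""
    return sum(v * max(a, 0.0) for v, a in zip(V, preactivations(z))) + C

def grad_g(z):
    """A subgradient of g at z (the gradient wherever g is differentiable)."""
    pre = preactivations(z)
    return [sum(v * row[j] for v, row, a in zip(V, W, pre) if a > 0.0)
            for j in range(len(z))]

def dual_norm(vec, p):
    """Norm dual to l_p: l_inf for p=1, l_2 for p=2, l_1 for p=inf."""
    if p == 1:
        return max(abs(v) for v in vec)
    if p == 2:
        return math.sqrt(sum(v * v for v in vec))
    if p == math.inf:
        return sum(abs(v) for v in vec)
    raise ValueError("this sketch only handles p in {1, 2, inf}")

def certified_radius(x, p, lip_phi=1.0):
    """Closed-form radius g(x) / (lip_phi * dual norm of gradient); 0.0 if not certifiable."""
    z = list(x)                       # phi(x) = x in this sketch
    value = g(z)
    if value <= 0.0:
        return 0.0                    # not predicted as the sensitive class
    denom = lip_phi * dual_norm(grad_g(z), p)
    # A zero subgradient means z minimizes g, so g is positive everywhere.
    return math.inf if denom == 0.0 else value / denom

x = [2.0, 0.5]
radii = {p: certified_radius(x, p) for p in (1, 2, math.inf)}
```

The same gradient is reused for all three norms; only the dual norm in the denominator changes, which is why the radii differ while the cost stays the same.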

Theoretical Promise

Our theoretical work suggests there is untapped potential in input-convex neural networks (ICNNs), even without a feature map. While initial results are promising, there is still a gap between theory and practice: binary ICNN classifiers do not yet reach perfect training accuracy.

This raises the question of whether ICNNs can be trained to realize their theoretical capability to overfit training datasets, which we leave as an open challenge for the field.

Conclusion

Our work on asymmetric certified robustness and feature-convex classifiers opens up new possibilities for enhancing certification in focused adversarial settings. The fast and deterministic certified radii provided by our architecture demonstrate its practical value in various applications.

For more details, see our paper by Samuel Pfrommer, Brendon G. Anderson, Julien Piet, and Somayeh Sojoudi, presented at the 37th Conference on Neural Information Processing Systems (NeurIPS 2023).

For further information, visit the arXiv page and explore our project on GitHub.

@inproceedings{pfrommer2023asymmetric,
  title={Asymmetric Certified Robustness via Feature-Convex Neural Networks},
  author={Samuel Pfrommer and Brendon G. Anderson and Julien Piet and Somayeh Sojoudi},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}